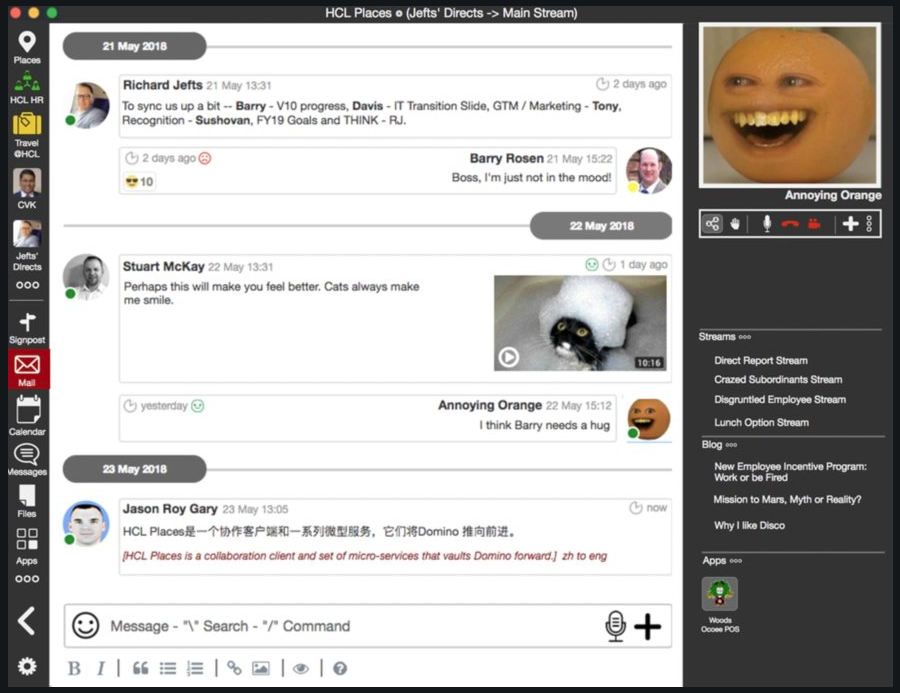

HCL was kind enough to invite me to their CWP Factory Tour, themed on the story Charlie and the Chocolate Factory and held in Chelmsford, Massachusetts on July 11-12, 2018.

We already knew from Engage and DNUG that Richard Jefts has no qualms about donning a silly costume, but seeing Jason Gary as an Oompa-Loompa was truly memorable. We now have photos and a means to blackmail them.

Many thanks to them and the HCL staff for organising the event; it was definitely worth the trip.

Letting the developers loose.

Over the course of two days, the HCL Staff, the core developers of Domino 10, showed us what they had been working on. We had unprecedented access to the core team, and the interactions – on the first day, a series of presentations/workshops and on the second day, an impromptu ‘Ask the developers’ session – were very fruitful. And the communication was two-way too – on numerous occasions, when we made requests, the developers were surprised or suddenly understood where we were coming from.

I had a sense of the developers being let loose after years of being held back, and it’s obvious that they are completely aware of the pain points that external developers and business partners have had, and are happy to have the opportunity and resources for actively solving them.

Don’t ask about the chicken.

HCL’s strategy

The penny dropped a number of times as I suddenly started to understand exactly what was coming our way, and how the different improvements and new developments fit together in the broader HCL strategy.

Business: play to Domino’s strengths: easy AppDev and secure, low-maintenance, on-premises infrastructure

We had the pleasure of listening to Darren Oberst, who heads HCL’s PCP division and who explained to us the logic of HCL’s investment in the IP of Domino/Notes. And it’s consistent. HCL has recognised that not all customers are cloud-frenzied and that having one’s own infrastructure, on-premises, is valuable when data privacy and control are important. This is especially true in the German and Swiss markets.

It makes a refreshing change from IBM’s mantra of ‘cloud first’, which left Domino sticking out like a sore thumb in IBM’s portfolio.

HCL are making the web-based mail client, Verse, work well on-premises, for instance without the need for a separate IBM Connections environment.

There is a sweet spot for Domino as a ‘one-server-does-all’ for small and medium businesses, a segment which was largely ignored by IBM in the past.

I’m hoping that, with some judicious investments in modernising Domino, we business partners can start offering Domino to completely new customers, instead of restricting ourselves to customers who already have an installation.

Increase robustness of the Domino server

There is a team called ‘Total Cost of Ownership’ whose purpose I didn’t initially understand. The penny dropped as I realised that their work is to increase the robustness of the Domino server, to make it an attractive investment for SMBs too, who aren’t big enough to have their own dedicated staff but still want an on-premises infrastructure. In short, to make Domino a ubiquitous offering, which is great!

Out of the many improvements that were announced, the one addressing the 64GB database limit was very welcome, as was the commitment to breathing some life into Sametime, including getting rid of the monstrous WebSphere database in the background. My jaw dropped when one of the casual comments was that the Search was going to be upgraded so that the indexes get immediately updated with new entries. This has been such a pain point in the past, explaining to customers why they can’t find the information they’ve just inputted, and I wasn’t expecting a resolution any time soon.

Impressive, also, was the self-repairing logic that is going to be included: fixups happening automatically, automatic checks that replicas are correctly distributed, and automated recoveries of corrupt databases. This goes completely in the direction of making the Domino server so reliable that it can be offered to SMBs without qualms.

Node.js opens up Domino to modern web developers.

It took me a while to get this. The essential point is that with the new Node.js integration, modern web developers do not need to have any Domino-specific knowledge to start coding with Domino as a back-end NoSQL server. All this new stuff is not aimed at hard-core Domino developers, who already know all the hoops and workarounds needed to get Domino speaking to other systems. It’s ‘code with Domino without opening Domino Designer’.

The DominoDB module will be available as an npm package. Download it, require it, and you can create a connection to a database and start performing CRUD operations against it. To complement this (and this was a welcome surprise), the HCL team are working on a SQL-like query language (Domino General Query Facility) which will return JavaScript arrays of documents. This promises higher query speeds and can be run from any of the Domino languages.

Another pain point that was addressed was Identity and Access Management (IAM), which will follow OIDC/OAuth standards and will be accessible as a service.

Hackathon

Three teams participated in HCL’s Hackathon. (I can’t yet say what one of the teams did)

‘web app start’ application

Graham Acres, Richard Jefts, Bernd Gewehr, Jason Gary, Christian Güdemann, Thilo Volprich, Thomas Hampel

In no time at all, this team created a ‘web app start’ application: a PWA that connects to a Domino server once and once only, then securely stores the credentials locally and can redirect to other Domino-based web apps. Wonderful, and a tour de force in the time available.

Enabling Domino Compatibility for modern apps built with Play Framework

Richard Jefts, Dan Dumont, Andrew Magerman, Ellen Feaheny, Jason Gary, Steve Nikopoulos, Dave Delay

Our team pursued a more conceptual idea (we didn’t code anything).

Ellen Feaheny leads AppFusions, a company that sells IAAS (Integration as a service) products, and came up with the idea of leveraging Domino with apps built with the Play Framework.

Play, by Lightbend, is used by enterprises for micro-services architectures using JavaScript, Scala, or Java. It is especially good for apps that need to be designed for large scale from the get-go.

We kidnapped the HCL Team doing the node.js integration and brainstormed with them on how this could be implemented, sorting through the high-level requirements.

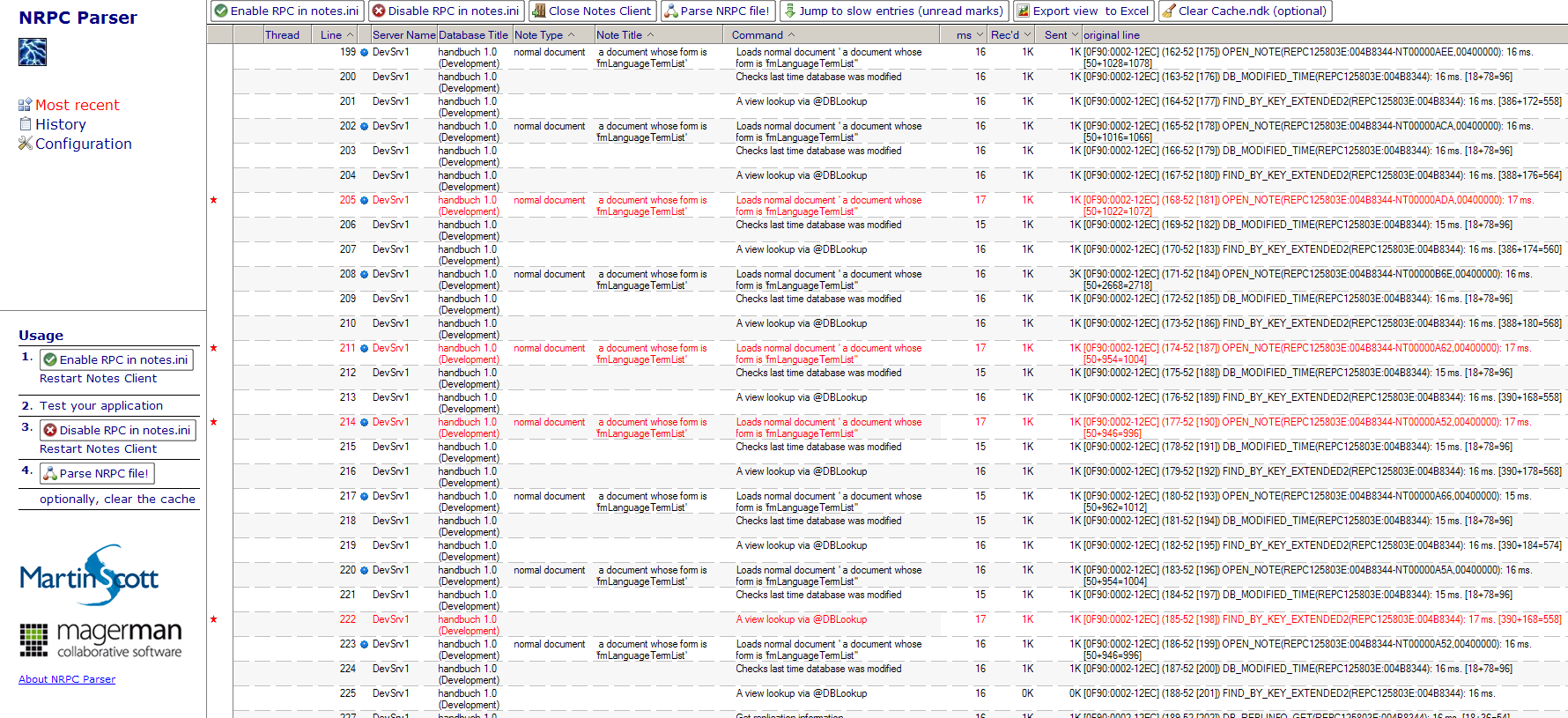

How does the gRPC protocol work?

Steve Nikopoulos and Brenton Chasse spent a long time holding my hand and explaining to me slowly how the gRPC protocol works.

As far as I’ve understood:

- It’s a very efficient protocol between two machines to deliver remote procedure calls, i.e. calls to execute something on a remote machine, using commands that look local to the caller.

- The transport is done over HTTP/2 (so there’s already a speed advantage because the connection remains open).

- The messages sent are small, because they travel as compact binary payloads inside the HTTP call, and because the format of the message is predetermined, so the declarative part of the message is kept to a minimum through diverse tricks.

- Crucially, messages can contain other messages, so you have an out-of-the-box possibility of sending a tree of nodes, which I found very satisfying.

Think of it as a very clever lossless compression for messages which have a predetermined format (for instance, ‘bulk update documents’, or ‘delete this document’).

The starting point for defining the protocol is a .proto file, which defines precisely the data structure of the messages (see grpc.io). Once these are defined, you use a protocol buffer compiler to generate data access classes in any of the supported languages (all the favourites are already there).

Put another way: gRPC comes with tools that let you generate ‘stubs’ in whichever supported language you want, and these stubs ‘compress’ and ‘decompress’ the messages for you. As an analogy, think of the stubs that were generated when building SOAP interfaces.
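To make the ‘compress’ idea a little more concrete, here is a minimal Python sketch of the protocol buffers wire format that gRPC uses by default for its messages. It is hand-rolled purely for illustration: in real life the generated stubs do this work for you. The message names (`DeleteDocument`, `BulkDelete`) and their fields are hypothetical and are not taken from HCL’s .proto file, and the extra framing that gRPC and HTTP/2 add around each message is left out.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def encode_key(field_number: int, wire_type: int) -> bytes:
    """A field is identified by its number, not its name: that is the saving."""
    return encode_varint((field_number << 3) | wire_type)


def encode_uint_field(field_number: int, value: int) -> bytes:
    return encode_key(field_number, 0) + encode_varint(value)  # wire type 0: varint


def encode_bytes_field(field_number: int, payload: bytes) -> bytes:
    # Wire type 2: length-delimited. Used for strings AND for nested messages.
    return encode_key(field_number, 2) + encode_varint(len(payload)) + payload


# Hypothetical message: DeleteDocument { string unid = 1; uint32 revision = 2; }
def encode_delete_document(unid: str, revision: int) -> bytes:
    return encode_bytes_field(1, unid.encode("utf-8")) + encode_uint_field(2, revision)


# Hypothetical message: BulkDelete { repeated DeleteDocument items = 1; }
def encode_bulk_delete(items: list[tuple[str, int]]) -> bytes:
    # Each nested message is simply embedded as a length-delimited field.
    return b"".join(
        encode_bytes_field(1, encode_delete_document(unid, revision))
        for unid, revision in items
    )


single = encode_delete_document("ABC123", 150)
as_json = json.dumps({"unid": "ABC123", "revision": 150}).encode("utf-8")
print(len(single), "bytes as protobuf vs", len(as_json), "bytes as JSON")
print(encode_bulk_delete([("ABC123", 150), ("DEF456", 1)]).hex())
```

Because both sides already know from the .proto file that field 1 is the document id and field 2 is the revision, the field names never travel over the wire, which is where most of the saving comes from. The nested `BulkDelete` shows the ‘messages can contain other messages’ point: a child message is just another length-delimited field inside its parent.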

Our idea:

If HCL makes the .proto file for the gRPC protocol public, then anybody can create an interface to the gRPC Server which will come with Domino.

This was just a concept, and there are still some open questions, for example: who supports the solution in case something goes wrong, how does one organise versioning, how does one ensure compatibility between different versions of the protocol…

Andrew Davis told us that the HCL team loved the idea, and the idea was rewarded with first prize! In fact, Andrew even committed in front of the audience to work with AppFusions (and others who wish to get involved) to see how this could be made real, sooner rather than later.

Conclusion

Domino is going places. The CWP Factory Tour was an exhilarating meet-up of the core Development team and the core Domino community, with very productive exchanges of ideas. I haven’t been this optimistic about the future of Domino/Notes in years. Watch this space!

Please call me if you want some help or advice about the future of Domino!

The lucky attendees
